Deep Learning Fundamentals - Part 5: What is an activation function in an artificial neural network?

In this fifth video of the Deep Learning Fundamentals series, we’ll be discussing what an activation function is and how we use these functions in neural networks. We’ll also discuss a couple of different activation functions, and we’ll see how to specify an activation function in code with Keras.

In a previous video, we covered the layers within a neural network, and we just briefly mentioned that an activation function typically follows a layer. So let’s go a bit further with this idea.

Alright, so what is the definition of an activation function?

In an artificial neural network, the activation function of a neuron defines the output of that neuron given a set of inputs. This makes sense given the illustration we had in the last video.

There, for each neuron in the following layer, we took the weighted sum of all the connections pointing to that neuron and passed that weighted sum to an activation function. The activation function then applies some operation to transform the sum, often into a number between some lower limit and some upper limit.
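To make that step concrete, here is a minimal sketch in plain Python. The function name `neuron_output`, the example inputs, and the weights are all made up for illustration, and the sketch leaves out a bias term since we haven’t discussed one here. The activation is passed in as an argument, so any function of one number will do; the example call uses the sigmoid function we look at next.

```python
import math

def neuron_output(inputs, weights, activation):
    """Weighted sum of the incoming connections, passed through an activation."""
    # One weight per incoming connection (hypothetical values are used below).
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # The activation function transforms the sum into the neuron's output.
    return activation(weighted_sum)

# Example: three incoming connections and a sigmoid-style activation.
inputs = [1.0, 2.0, 3.0]
weights = [0.2, -0.5, 0.1]
output = neuron_output(inputs, weights, lambda z: 1.0 / (1.0 + math.exp(-z)))
print(output)  # a value strictly between 0 and 1
```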

So, what’s up with this transformation? Why is it even needed?

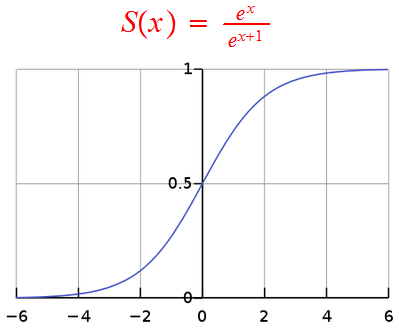

To explain that, let’s first look at an activation function called Sigmoid.

Sigmoid takes in an input, and if the input is a very negative number, then sigmoid will transform the input into a number very close to zero. If the input is a very positive number, then sigmoid will transform the input into a number very close to one. If the input is close to zero, then sigmoid will transform the input into some number between zero and one, with an input of exactly zero mapping to 0.5.

So, for Sigmoid, zero is the lower limit, and one is the upper limit.
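Here is a small sketch of that behavior in plain Python, assuming the standard logistic form of sigmoid, 1 / (1 + e^(-x)). The function name `sigmoid` and the sample inputs are just for illustration. The two branches compute the same value; splitting them only avoids overflow in `math.exp` for very negative inputs.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)

# Very negative -> close to 0, zero -> 0.5, very positive -> close to 1.
for x in (-10.0, 0.0, 10.0):
    print(x, sigmoid(x))
```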

Alright, so now that we understand mathematically what one of these activation functions is doing, let’s get back to our earlier question: why are we doing this? What’s the intuition?

Well, an activation function is biologically inspired by activity in our brains, where different neurons fire (or are activated) in response to different stimuli. For example, if you smell something pleasant, like freshly baked cookies, certain neurons in your brain will fire and become activated. If you smell something unpleasant, like spoiled milk, this will cause other neurons in your brain to fire.

So within our brains, certain neurons are either firing, or they’re not. This can be represented by a zero for not firing or a one for firing. With the Sigmoid activation function in an artificial neural network, we saw that the neuron’s output can be anywhere between zero and one. So the closer the output is to one, the more “activated” that neuron is. The closer it is to zero, the less activated that neuron is.

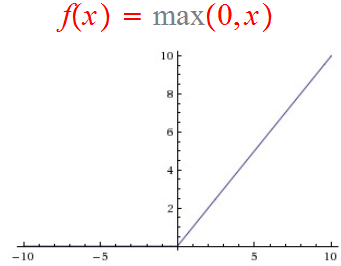

Now, it’s not always the case that our activation function is going to do a transformation on an input to be between zero and one. In fact, one of the most widely used activation functions today, called ReLU, doesn’t do this. ReLU, which is short for Rectified Linear Unit, transforms the input to the maximum of either zero or the input itself.

So if the input is less than or equal to zero, then ReLU will transform the input to be zero. If the input is greater than zero, ReLU will then just output the given input. The idea here is, the more positive the neuron’s input is, the more activated the neuron is.
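In plain Python, that rule is a one-liner. This is just an illustrative sketch; the function name `relu` and the sample inputs are made up.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: the maximum of zero and the input itself."""
    return max(0.0, x)

# Zero and negative inputs become zero; positive inputs pass through unchanged.
for x in (-3.0, 0.0, 2.5):
    print(x, relu(x))
```

Notice that, unlike sigmoid, there is no upper limit here: the larger the positive input, the larger the output.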

Now, we’ve only talked about two activation functions here, Sigmoid and ReLU, but there are many different types of activation functions that do different types of transformations to their inputs.

Towards the end of the video, we take a look at how to specify an activation function in a Keras Sequential model.
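As a rough sketch of what that can look like in code, here are two common ways to do it, assuming the Keras API that ships with TensorFlow 2. The layer sizes (3 inputs, 5 hidden units, 2 outputs) and the variable names `model_a` and `model_b` are arbitrary choices for illustration, not values taken from the video.

```python
from tensorflow import keras

# Option 1: pass the activation by name when creating the layer.
model_a = keras.Sequential([
    keras.Input(shape=(3,)),                      # 3 input features (assumed)
    keras.layers.Dense(5, activation="relu"),     # hidden layer followed by ReLU
    keras.layers.Dense(2, activation="sigmoid"),  # output layer followed by sigmoid
])

# Option 2: add the activation as its own layer right after a Dense layer.
model_b = keras.Sequential()
model_b.add(keras.Input(shape=(3,)))
model_b.add(keras.layers.Dense(5))                # Dense layer with no activation
model_b.add(keras.layers.Activation("relu"))      # ReLU applied to its output
```

In both options, the activation is applied to the output of the layer it follows. Which one you use is mostly a matter of style.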

Previous videos in this series: