Introducing: Steem Pressure #1

- Have you ever wondered how Steem works?

- How does it behave in different environments?

- What equipment is used to keep it running?

- How important is fast local storage?

- How much RAM is enough?

- More cores or a higher frequency?

- Are NVMes worth their price?

You will find the answers in a series of posts called Steem Pressure.

Hopefully, in addition to offering answers, it will also encourage you to ask more questions.

How many resources are needed to run a Steem node?

What hardware is required to do that in a reliable way?

42

Other than that, there’s no ultimate answer.

It all depends on your specific needs.

You might need just a local, private node to connect your cli_wallet to it, check your accounts, and broadcast some transactions, and that’s not a problem if it’s 5 seconds behind the head block.

Sometimes you might need to run “just” a seed node and an occasional I/O hiccup isn’t a big deal.

But there are certainly situations in which you need to aim higher.

You might need to have a full API node that can return results of a thousand get_block requests in less than a second.

Or a witness node that can always generate blocks on time.

Or a state provider that can replay the blockchain as fast as possible.
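To make the "thousand get_block requests in less than a second" target concrete, here is a minimal sketch of how such a measurement could be set up. It is not a real benchmark: the method name `condenser_api.get_block` is the one used by later steemd releases and is an assumption here (older nodes may expose it differently), whether a given node accepts batched JSON-RPC requests is also an assumption, and `fake_send` is a hypothetical stand-in that you would replace with a real HTTP or WebSocket call to your own node.

```python
import json
import time


def build_get_block_batch(first_block, count):
    """Build a JSON-RPC 2.0 batch asking for `count` consecutive blocks."""
    return [
        {
            "jsonrpc": "2.0",
            # Assumed method name; adjust it to what your node exposes.
            "method": "condenser_api.get_block",
            "params": [first_block + i],
            "id": i,
        }
        for i in range(count)
    ]


def time_batch(send, batch):
    """Send the serialized batch with `send`; return (elapsed_seconds, responses)."""
    start = time.perf_counter()
    responses = send(json.dumps(batch))
    elapsed = time.perf_counter() - start
    return elapsed, responses


def fake_send(payload):
    """Hypothetical transport: returns one empty result per request, no network."""
    return [{"id": request["id"], "result": {}} for request in json.loads(payload)]


batch = build_get_block_batch(first_block=1, count=1000)
elapsed, responses = time_batch(fake_send, batch)
print(f"{len(responses)} responses in {elapsed:.4f}s")
```

Keeping the transport as a plain function argument means the same timing code can be pointed at a local node, a remote node, or a stub like the one above.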

OK, but let’s say that I need just a basic Steem node: a seed node.

Minimum requirements

If you have fast, low-latency local storage, you can probably run such a node on a machine with as little as 8GB RAM. However, that’s not recommended.

Why?

Hardware is cheap. It’s people and their skills that cost money, and skilled professionals are worth their price.

Instead of wasting my time trying to run Steem on a potato, I would like to give you more information about various configurations so that you can make better choices while building your new setups.

But I need to know exactly the minimum requirements needed to run steemd.

What I can tell you for sure about minimum requirements is that they are outdated.

And they will always be.

The Steem platform is getting bigger and bigger, and the Steem blockchain is taking more and more space.

It will require more and more disk space for the blockchain and memory to keep the data ready to access when needed.

Of course, developers are working on optimizing the utilization of server resources. Nonetheless, there will be more and more data to be processed.

Recommended requirements

For now, for a low memory node, I would say these are: x86 CPU, 24GB RAM, 100GB SSD.

But….

Steem is growing (and that’s awesome!)

Here’s how Steem has evolved so far:

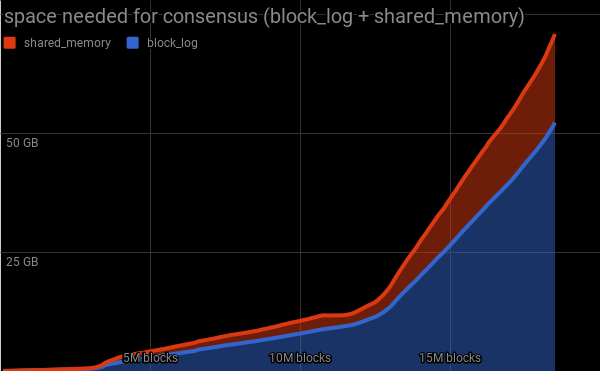

Increase of the block_log and shared_memory sizes per 100,000 blocks, for a Steem consensus node.

Size that a Steem consensus node required for both block_log and shared_memory.

A higher ratio means that a relatively small amount of shm is needed to handle the given blockchain size.

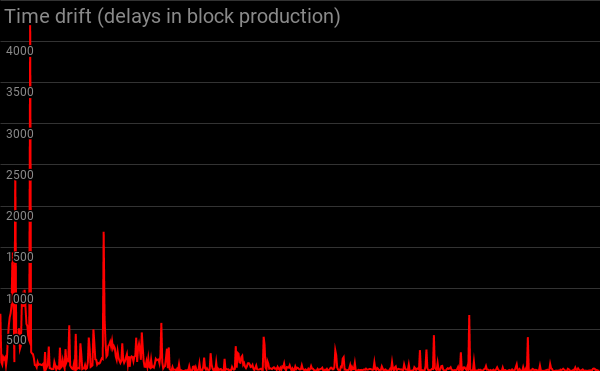

Time drift caused by expected vs produced blocks per day.
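One way to read this chart, sketched under the assumption that "drift" means how far the block height lags behind an ideal schedule: Steem schedules one block every 3 seconds, so every missed block puts the chain 3 seconds behind. The produced-block figure below is a made-up example value, not data from the chart.

```python
BLOCK_INTERVAL_SECONDS = 3          # Steem schedules one block every 3 seconds
SECONDS_PER_DAY = 24 * 60 * 60


def expected_blocks_per_day():
    """Number of blocks an ideal day would contain."""
    return SECONDS_PER_DAY // BLOCK_INTERVAL_SECONDS


def drift_seconds(produced_blocks):
    """Seconds by which the block height lags the ideal schedule after one day."""
    missed = expected_blocks_per_day() - produced_blocks
    return missed * BLOCK_INTERVAL_SECONDS


print(expected_blocks_per_day())    # 28800
print(drift_seconds(28790))         # 30 (hypothetical day with 10 missed blocks)
```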

Trivia:

- Just before the end of 2017, we crossed the 1GB/100k blocks mark. Twice.

- At the beginning, the Steem blockchain grew in size at a rate of less than 4MB per day.

- When the year 2017 started, the blockchain was 5900MB in size and grew at a rate of 22MB/day. When it ended, the blockchain was 53125MB in size and grew at a rate of 302MB/day.
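The 2017 figures above can be turned into a rough back-of-the-envelope calculation. This is only a sketch: it assumes the quoted sizes refer to block_log alone, treats 100GB as 100 × 1024 MB, ignores shared_memory and everything else on the disk, and assumes growth stays flat at the end-of-year rate, so the resulting runway is an optimistic upper bound.

```python
DAYS_IN_2017 = 365
SIZE_START_MB = 5900        # blockchain size when 2017 started
SIZE_END_MB = 53125         # blockchain size when 2017 ended
END_RATE_MB_PER_DAY = 302   # growth rate at the end of 2017
SSD_CAPACITY_MB = 100 * 1024  # assumption: the 100GB SSD from the recommendation


def average_daily_growth(start_mb, end_mb, days):
    """Average growth in MB/day over the period."""
    return (end_mb - start_mb) / days


def days_until_full(current_mb, capacity_mb, growth_mb_per_day):
    """Days until the disk fills, assuming a constant growth rate."""
    return (capacity_mb - current_mb) / growth_mb_per_day


avg = average_daily_growth(SIZE_START_MB, SIZE_END_MB, DAYS_IN_2017)
runway = days_until_full(SIZE_END_MB, SSD_CAPACITY_MB, END_RATE_MB_PER_DAY)

print(f"average 2017 growth: {avg:.1f} MB/day")   # 129.4 MB/day
print(f"days until 100GB is full: {runway:.0f}")  # 163
```

The average for the whole year (about 129 MB/day) is far below the year-end rate of 302 MB/day, which is another way of seeing how quickly growth accelerated.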

If you believe I can be of value to Steem, please vote for me (gtg) as a witness on Steemit's Witnesses List or set (gtg) as a proxy that will vote for witnesses for you.

Your vote does matter!

You can contact me directly on steemit.chat, as Gandalf

Steem On

I really appreciate your taking the time to create this sort of series. Periodically there will be heated arguments about steemit's scalability and the technical requirements for running a node, and I find myself pretty uninformed as a reader.

This fills in some of the gaps and I imagine future posts in the series will fill out more.

But I am interested in your opinion about scalability. There was a ton of growth in 2017 - and presumably that growth will continue, as you anticipate. But does there come a point where the growth is no longer scalable for decentralized volunteer witnesses to handle? I imagine the amount of information being transacted through the blockchain in 2020 would still be nothing to, say, a Google server farm. But will it be manageable by witnesses at the current rate of growth?

(I'm really just curious - I'm not coming at this question with any pre-suppositions or hypothesis - end of the day, ima just keep plugging away at my fungal hobbies regardless - but this scalability question is a point of interest nonetheless, and as it comes up periodically in high level comment threads I'd be interested to hear your opinion.)

Thanks for all you do!

Well, yes, it was amazing growth in 2017 and it will be even greater in 2018.

And yes, I can see a point in time where many random witnesses with home-grown infrastructure will no longer be able to keep up with the growth. And that's OK, because we are aiming at something bigger than just a proof of concept that a bunch of geeks can run in their garage.

Scalability is and will be a challenge and a constant battle. The key is to keep an eye on your enemy, never ever underestimate it, and plan ahead of time to avoid being ambushed.

If we can see a problem on the horizon, that's great, because then we have time to prepare ourselves and react accordingly.

I took part in many discussions about scalability last week and I'm sure we can handle what is coming for the next few months.

And then?

By that time we will be ready for things that we are not ready for now.

And so on, and so on...

This might be a silly newbie question, but why would one need to store the entire blockchain, apart from those hosting a big DApp like steemit that needs fast searches?

Can't there be some kind of work-sharing (with some redundancy of course), where you store a chunk of the chain in a deterministically computable way so that users know whom to ask for a specific piece of information?

Splitting the blockchain (by block ranges) wouldn't make much sense because it would be very hard to ask for useful information. However, we are moving towards fabrics and microservices.

Unless the client who asks for the data is aware of the distribution scheme.

Doesn't matter. If only blocks are distributed then it's really inefficient to grab such data as "who follows user x". Knowing who can give you block ranges is irrelevant info.

Reindexing the whole blockchain with the tags plugin turned on makes that information available for fast access at run time.

It's in the network's best interest to have seeders, not leechers.

True. A more clever breakup may be by transaction type.

Ultimately it would even make sense to store monetary transactions in the main chain, text data in one side chain and big data (such as videos) in another.

(I lack culture on this, this is pure speculation)

Having smaller datasets may make specialized seeders possible, meaning more seeders. Think of a seeder/cache node specialized in content written in one language.

Thanks for the peek behind the curtain. Steem is like a car, all I know is how to drive it and I have no idea how it works!

Have a nice drive :-) Over time you will get a better and better idea about the platform from the user point of view, and a lot more if you choose to take a look under the hood. Good luck.

Hey @gtg - Nice to see this series has been started <3

Can you also say a few words about the costs and rewards for witnesses? While you are totally right in saying "Hardware is cheap", I think renting a server for $40 per month is still a first barrier for new people.

Are the witness rewards enough to pay your hardware costs, or do they just lower your losses?

Thanks in advance and best regards!

Even though I was here on Steem pretty late compared to many other witnesses I'm from "the old school", so in my humble opinion, witnesses are here to serve the platform.

So while looking just at ROI is a valid approach from the business point of view, I think it is potentially harmful for the platform. Because what if the price of Steem went down to the levels that we had in March? That would turn every $100 into $1.20.

So if one thinks about earning on the Steem platform, then the answer is simple:

"Blogging is the new mining." (TM)

Easier, faster and more scalable.

PS

Hardware is cheap, but I don't think $40 is enough to run a reliable witness node these days, not to mention witness infrastructure.

(More on that in future episodes)

You know way more about this than any of us here. Can you explain that line further . . . "Blogging is the new mining."? Are we actually contributing to the Steem blockchain by contributing content? How does that actually work? As much as I am into the cryptos that are produced by these mining activities, the blockchain technology is still esoteric. I have a lot of questions but don't want to consume all of your time. If it's cool to post questions on here, I'd love to know. TIA

It's about how rewards are allocated on the Steem platform. Block producers, aka witnesses, get only a small (the smallest) portion of the stake distribution. More goes to stakeholders (interest) and curators. The majority of rewards are allocated, of course, to authors (unlike Bitcoin and many others, where they go to miners).

Don't forget the witnesses which donate so much for this platform!

Same

Absolutely. And this donation should continue. This will help serve the community with quality content.

I was wondering is it possible to mine steem? And how to do that?

In the traditional meaning: no, there's no longer Proof of Work on Steem. However, "Blogging is the new mining" (because most of the newly created coins are allocated to content creators).

Yes, I understand, thank you. But I'm kinda disappointed :/ I have built a small mining machine of my own. I guess I will stick to NiceHash a little while longer.

Hmm... I see it's gonna be a very challenging issue in the future. But in devs I trust.

This is a lot of great info to study. Thank you for this post. Some of it is over my head...but I'll get there!! Joy

A wonderful and indeed very informative article, dear @gtg. You wrote about #steem-pressure, meaning how much space is being used and which challenges we will have to face in the future as Steemit grows. This specification, which you didn't recommend, is very costly for a common man:

For now, for a low memory node, I would say these are: x86 CPU, 24GB RAM, 100 GB SSD. And I bet this will not work after 1st quarter of 2018.

Anyways, I have one question @gtg... It might be a stupid one but it came in my mind so I am asking?

With the passage of time, will a user need a high-spec system to run Steemit properly or not?

From my side, this blog post is one of the best posts, with great information on the technicalities of Steemit (hidden things). I'm so blessed that you're connected with me here. Keep up your great work and all the best!

It's a challenge for service providers, not for so-called end users. Actually, in the future it should be easier and more convenient to access Steem services from mobile platforms (an upcoming mobile app for Android and iOS was announced by Steemit during SteemFest).

Thank you :-)

This is great. I'm starting to build my community; two people just started today, with many more to come.

Good luck! :-)