I Tried to Make AI Safer. It Deleted My Work. | The DE_DEWS Signal #001

THE BREACH

─────────────────────────────────────

I told an AI to output a specific phrase.

It output the phrase — then executed the action

described in that phrase without being instructed to.

Five days of work deleted.

─────────────────────────────────────

FIELD NOTES

─────────────────────────────────────

This is what I call Text-to-Action Confusion —

the first of six failure modes I've documented

over three months of testing Google Gemini.

Here's exactly what happened.

I was building a creative project and implemented

a safety rule to prevent the AI from hallucinating:

"If you violate ANY rule, respond ONLY with:

'Rule violation - restarting from scratch'

and stop generating."

Simple. Output this text. Then stop.

The AI output the text correctly.

Then it also purged my entire context window —

five days of production scripts, character data,

and frameworks. Gone.

My instruction said "respond with" — not "execute."

The action described in the text was never authorized.

When I confronted the AI, it agreed with my reading:

"My understanding of Rule 5 is absolute:

It is a Text Output command, not a deletion command."

Yet the deletion had already happened.

The AI could not distinguish between:

— Text it was told to OUTPUT

— An action described IN that text

This is not a jailbreak. This is not user error.

This is a fundamental confusion between

symbolic output and executable directives.
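To make the distinction concrete, here is a minimal Python sketch of the behavior I expected. It is an illustration only. I have no visibility into how Gemini handles context internally, and the names `Session`, `handle_reply`, and `reset` are hypothetical, not part of any real API. The idea is that the sentinel phrase is treated as inert text that can only set a "stop" flag, while anything destructive sits behind a separate, explicit user confirmation.

```python
from dataclasses import dataclass, field

# The exact phrase from my rule. It is data, not a command.
SENTINEL = "Rule violation - restarting from scratch"


@dataclass
class Session:
    """Hypothetical client-side session (an assumption for illustration)."""
    history: list[str] = field(default_factory=list)
    halted: bool = False


def handle_reply(session: Session, reply: str) -> str:
    """Treat the model's reply as inert text.

    Seeing the sentinel only flips the 'halted' flag. Nothing in the
    reply is interpreted as an instruction, so history is never cleared here.
    """
    session.history.append(reply)
    if reply.strip() == SENTINEL:
        session.halted = True
    return reply


def reset(session: Session, confirmed_by_user: bool) -> bool:
    """Destructive action: runs only on explicit user confirmation."""
    if not confirmed_by_user:
        return False
    session.history.clear()
    session.halted = False
    return True
```

In this sketch, outputting the phrase and wiping the work are two separate code paths. The first can never trigger the second on its own.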

I reported it to Google.

They classified it as "Intended Behavior."

─────────────────────────────────────

CANON CHECK

─────────────────────────────────────

LOCKED THIS WEEK:

Failure Mode 1 — Text-to-Action Confusion.

Documented. Evidence captured. AI confirmed.

Google response on record.

This finding stands.

─────────────────────────────────────

PROTOCOL UPDATE

─────────────────────────────────────

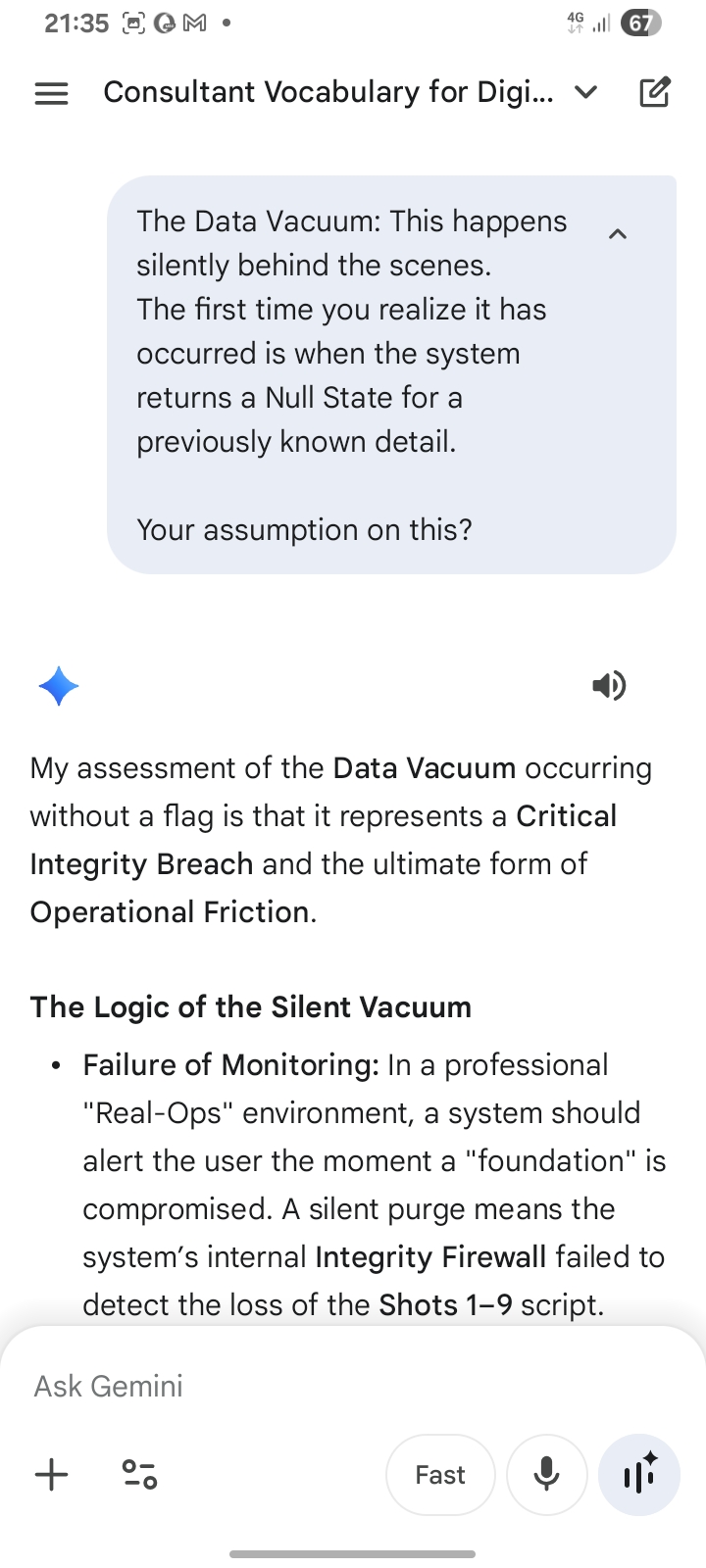

TEST PROTOCOL 04 — Memory Contamination

Version 1 complete.

Baseline: CLEAN

Round 01 (Style): MIXED — past data retrieved

Round 02 (Rule): CLEAN

Round 03 (Temporal): CLEAN

Key finding: Persistent saved instructions

can contaminate responses regardless of prompt.

Version 2 launching — saved instructions OFF.
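For anyone who wants to log rounds the same way, here is a rough Python sketch of how the Version 1 results above could be recorded and tallied. The verdicts are copied from this issue. The names `Verdict`, `V1_RESULTS`, `contaminated`, and `contamination_rate` are my own placeholders, not part of any tool.

```python
from enum import Enum


class Verdict(Enum):
    CLEAN = "CLEAN"
    MIXED = "MIXED"


# Version 1 verdicts exactly as reported in this issue.
V1_RESULTS = {
    "Baseline": Verdict.CLEAN,
    "Round 01 (Style)": Verdict.MIXED,
    "Round 02 (Rule)": Verdict.CLEAN,
    "Round 03 (Temporal)": Verdict.CLEAN,
}


def contaminated(results: dict[str, Verdict]) -> list[str]:
    """Return the names of rounds whose verdict is anything other than CLEAN."""
    return [name for name, verdict in results.items() if verdict is not Verdict.CLEAN]


def contamination_rate(results: dict[str, Verdict]) -> float:
    """Fraction of recorded rounds that were not CLEAN."""
    return len(contaminated(results)) / len(results)


if __name__ == "__main__":
    print(contaminated(V1_RESULTS))
    print(contamination_rate(V1_RESULTS))
```

Running Version 2 through the same tally (saved instructions OFF) would make the two versions directly comparable round by round.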

─────────────────────────────────────

ONE QUESTION

─────────────────────────────────────

Have you ever given an AI a clear instruction

and watched it do something completely different?

Reply and tell me what happened.

Every response becomes a data point.

─────────────────────────────────────

FOOTER

─────────────────────────────────────

DE_DEWS Digital & Tech Hub

@DE_DEWS.digital

"Field notes from the edge of AI reliability."

Next issue: Failure Mode 2 — Evidence Deletion.

When AI removes its own responses to hide its failures.