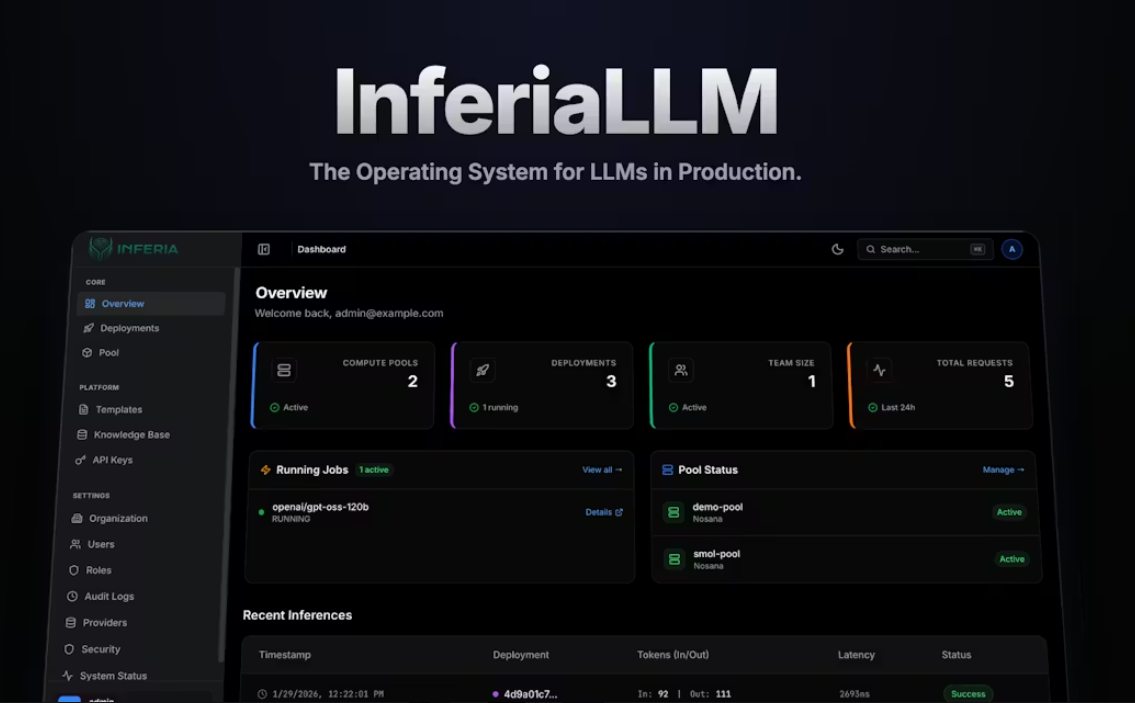

InferiaLLM - The Operating System for LLMs in Production

Hunter's comment

InferiaLLM is an operating system for running LLM inference in-house at scale. It combines everything needed to take a raw LLM and serve it to real users into a single system: user management, inference proxying, scheduling, policy enforcement, routing, and compute orchestration.

This is posted on Steemhunt - A place where you can dig products and earn STEEM.
