Artificial Neural Networks

Humans have always sought new ways to improve their living conditions. At first this meant simplifying tasks that require physical force. The results made it possible to direct those efforts toward other fields, such as the design and manufacture of machines that solve mathematical problems quickly and efficiently, sparing us calculations that are tedious to do by hand.

Charles Babbage tried to build a machine that could solve mathematical problems, and many others later attempted the same. However, it was not until around the Second World War, when electronic instruments became available, that the first real advances were seen.

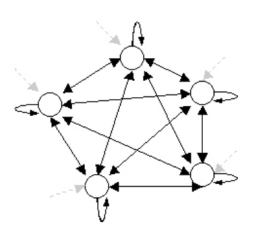

Figure 1. Hopfield Network

I want to thank the people who have supported and helped me in my personal growth @hogarcosmico @bettino @rchirinos @annyclf @paolasophiat @luisrz28 @jesusrafaelmb @erika89 @rubenanez @natitips @hendrickjub

Artificial intelligence tries to discover and describe aspects of human intelligence so that machines can simulate them. The field has developed strongly in recent years, with applications in areas such as computer vision, theorem proving, and natural language processing, among others.

Neural networks are a way to emulate characteristically human abilities, such as memorizing or associating facts. If we examine the problems that cannot be expressed as an algorithm, we notice that they share a common characteristic: solving them depends on experience. Humans solve this kind of problem by drawing on the experience they have acquired. A neural network can therefore be described as an artificial, simplified model of the brain whose basic processing unit is inspired by the neuron, the fundamental cell of the nervous system. Thought takes place in the brain, which is composed of billions of interconnected neurons. Regardless of how we define intelligence, it is commonly said to lie in these interconnected neurons and in their interactions. Humans are able to learn, and we can associate learning with problems that we could not solve at first but can solve after acquiring information about them. In summary, a neural network consists of processing units that exchange data or information.

Definitions of Neural Networks

Like AI itself, neural networks have many definitions. Three of them stand out:

- A new form of computing, inspired by biological models.

- A mathematical model composed of a large number of processing elements organized in levels.

- Massively parallel interconnected networks of simple (usually adaptive) elements with a hierarchical organization, which try to interact with real-world objects in the same way the biological nervous system does.

Advantages

- Learning: Neural networks can learn to perform tasks from training or from initial experience.

- Self-organization: A neural network creates its own internal organization or representation of the information it receives during a learning stage.

- Fault tolerance: Because a neural network stores information redundantly, it can still respond acceptably even after suffering considerable damage.

- Flexibility: A neural network can handle minor changes in the input information, for example noisy input.

- Real time: The structure of a neural network is parallel, so when it is implemented on computers or on special electronic devices, answers can be obtained in real time.

Structure of Neural Networks

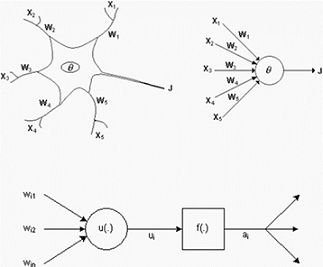

A neuron is the basic unit of the network. We can describe it by comparison with a biological neuron, since the two operate in a similar way.

The upper part of the image shows a biological neuron, which consists of dendrites, a cell body, an axon, and synapses. The lower part shows an artificial neuron, an information processing unit: a simple computing device that, given a vector of inputs, produces a single output.
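The behavior of an artificial neuron can be sketched in a few lines of Python. This is only a minimal illustration, assuming the common formulation in which the neuron computes a weighted sum of its inputs plus a bias and passes it through a sigmoid activation; the post does not specify an activation function, and the names `neuron_output`, `example_inputs`, `example_weights`, and `example_bias` are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Return the single output of a neuron for one vector of inputs."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of the inputs (the role played by the synapses), plus a bias.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical values, chosen only for illustration.
example_inputs = [1.0, 0.0, 1.0]
example_weights = [0.5, -0.4, 0.5]
example_bias = -1.0

y = neuron_output(example_inputs, example_weights, example_bias)
print(y)  # the weighted sum is 0.0 here, so the sigmoid returns 0.5
```

However many inputs the neuron receives, it reduces them to one number, which is what lets neurons be chained into layers.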

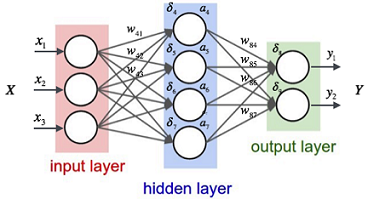

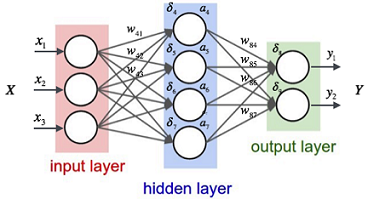

A neural network is made up of interconnected neurons grouped into layers, typically three (the number of layers can vary). Data enters through the input layer, passes through the hidden layer, and exits through the output layer.

Knowing that the neuron is the basic unit of the network, we can define neural networks as mathematical models inspired by biological systems, adapted to and simulated on conventional computers.
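The path of the data through the layers can be sketched as follows. This is a minimal illustration, not a definitive implementation: it assumes fully connected layers with sigmoid neurons, and the 2-3-1 layout, the weight values, and the names `layer_forward` and `network_forward` are all hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation used by every neuron in this sketch."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute the outputs of one layer; weights[j] holds the weights of neuron j."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the input values through each layer in order and return the last outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network: 2 inputs, 3 hidden neurons, 1 output neuron.
hidden_layer = ([[0.2, -0.1], [0.4, 0.3], [-0.5, 0.6]], [0.0, 0.1, -0.1])
output_layer = ([[0.3, -0.2, 0.5]], [0.05])

result = network_forward([1.0, 0.5], [hidden_layer, output_layer])
print(result)  # a list with one value between 0 and 1
```

The input layer does no computation in this sketch; it simply holds the values handed to the hidden layer.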

Classification

1. According to its Architecture:

The architecture of a network is the arrangement of its neurons and the connections between them. Within a network we can distinguish the layers, the type of each layer (hidden or visible, input or output), and the direction of the connections between neurons.

They are divided into:

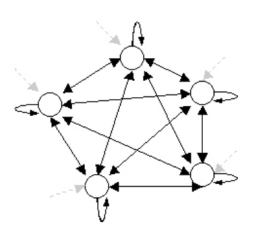

- Monolayer networks: These consist of a single layer of neurons that exchange signals with the outside and serve at the same time as the input and the output of the system. The Hopfield network is the most representative example of this model.

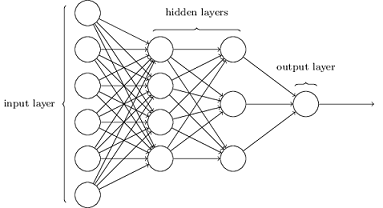

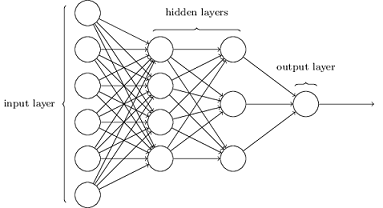

- Multilayer Networks: These consist of several layers of neurons connected to each other. Depending on the direction of the connections, they can be divided into:

- Networks with forward connections: These contain only forward connections between layers, which means that a layer cannot connect to a layer that receives the signal before it.

- Networks with backward connections: These also contain backward connections between layers, so information can return to earlier layers.

Figure 2. Multilayer Networks
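The Hopfield network mentioned above as the representative monolayer model can be sketched in a few lines. This is a simplified illustration under stated assumptions: patterns are bipolar (+1/-1), weights are set with the Hebbian rule, and neurons are updated one at a time. The function names `train_hopfield` and `recall` and the stored pattern are hypothetical.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from bipolar (+1/-1) patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # a neuron has no connection to itself
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update the neurons one at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            total = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Hypothetical pattern to memorize.
stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

# Corrupt one element and let the network restore the stored pattern.
noisy = list(stored)
noisy[0] = -noisy[0]
recovered = recall(weights, noisy)
print(recovered == stored)  # True
```

The same layer of neurons receives the noisy input and produces the answer, and the network recovers the memorized pattern from a damaged copy, which illustrates the associative memory and fault tolerance described earlier.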

2. According to its Learning:

Learning consists of training the network with a set of patterns: the network processes these patterns iteratively until it produces satisfactory answers. That is, the synaptic weights are adjusted until the network gives the best answers it can for the set of training patterns. We can distinguish 3 types of learning:

- Supervised Learning: The network is given input patterns together with the output desired for each input, and the synaptic weights are modified so that the network's actual output matches the desired one.

- Unsupervised Learning: The network is given only the input patterns, without the output patterns, and is left to classify them itself according to the common characteristics it finds in them.

- Hybrid Learning: The target patterns are not provided; the network is only told whether its answer to an input pattern was correct or incorrect. This scheme is often called reinforcement learning.
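To make the supervised case concrete, here is a minimal sketch of a single neuron that adjusts its weights iteratively from input and output patterns. It assumes the classic perceptron learning rule with a step activation, which the post does not name; the logical AND data set, the learning rate, and the names `predict` and `train_perceptron` are hypothetical choices for illustration.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0


def predict(weights, bias, inputs):
    """Output of a single neuron for one input pattern."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Adjust the weights until every training pattern is answered correctly."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                errors += 1
                # Move each weight in the direction that reduces the error.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if errors == 0:  # all patterns answered correctly, so stop
            break
    return weights, bias


# Training patterns for logical AND: (inputs, desired output).
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
print(predictions)  # [0, 0, 0, 1]
```

The loop repeats over the patterns until the answers are satisfactory, mirroring the iterative adjustment of synaptic weights described above. A single neuron like this one can only learn linearly separable problems such as AND.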

Applications

Neural networks can be used in a wide variety of applications, both commercial and military. They can be developed in a reasonable period of time and can perform certain specific tasks better than other technologies. When implemented in hardware, they have a high tolerance for system failures and provide a high degree of parallelism in data processing. This makes it possible to integrate low-cost neural networks into existing and newly developed systems.

- Book: Artificial Intelligence: A Modern Approach, second edition. Stuart J. Russell & Peter Norvig.
